What

This is the second of a series of Posts where I want to show some Private and/or "Smart Home" use cases that involve Cribl.Cribl (Stream) is a Log/Data Streaming Platform that allows you to route, enrich, reduce (and more) the data that you send through it. Check cribl.io, their Docs, University and Community for more details.

Here's the first Post, about Slack Notifications.

What Exactly

For some of my Use Cases / Data sources I was looking for a free longterm storage solution. Not for big data volumes - basically for summary statistics. In this case the daily Energy Consumption and Production (Photovoltaic) in/at my house.

I don't have Splunk (or similar) 24/7 running in the Cloud or OnPrem. For midterm Analysis and Visualization I use Humio / Crowdstrike Falcon Logscale, but it only has a retention of 7 days.

Than I thought about Google Sheets. It was one of my first Cloud services and I still use it for some of my private statistics. I have sheets in my account from 2006 and they are still in good shape :) Talking about longterm...

In the past I tried to write to Google Sheets for another use case via their API and failed due to me not in the mood (or lag of skills) for the process of registering and handling the accounts, tokens and stuff around "officially" using the API.

Coincedentally I found a hassle-less way of writing to Google Sheets by (miss-)using Google Forms as an Interface

How

- Create a Google Forms questionaire with just „Text“-type answers.

- Find out the Field IDs of the answer inputs. (See hint below)

- Use them (the IDs) to build the URL in your Pipeline.

- Send „faked Form replies“ as Webhook to Google Forms.

=> Forms creates a Google Sheet with all „responses“.

Go to the "Live view" of your Forms, open Developer Tools and search in there for "entry." [string "entry" + dot "." ]

- Source

- The data that you need to send to Google Sheets needs to come from somewhere, right?

It's up to you/based on the use case. Note that it's not meant to store 5 GB of XML WinEvent Logs per day. More for summary stats like described here... - Extract/Create 1 field per "Forms Response" created earlier.

- Route

- A simple Route pointing the data from the Source to the Destination via the Pipeline

- Pipeline

- You need to parse/extract the data that you want to send and also eval some fields to construct the final URL

- Destination

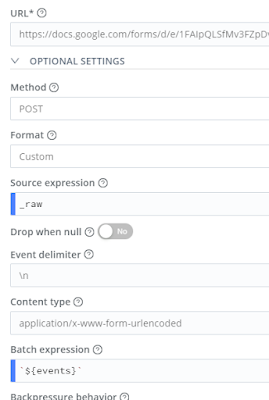

- A simple, almost empty Webhook Destination

For 3 (Pipeline) the first part is again very use case based. For the second part, to build the Webhook URL an example:

As it didn't fit in the Screenshot, here is the full __url definition of my example:

For 4 (Destination), there is nothing special needed. As mentioned earlier, the URL you configure here is not relevant as it will be overwritten with the internal __url field that we created in the Pipeline.

Conclusion

- With this setup you can store low volume/long retention time data to Google Sheets

- No need for Google API business.

- Not meant to be a big data storage, just a free and (once setup) simple way to store Statistics/Summaries.

- Feel free to comment with how you utilized this setup.

References/Links

- Record of Cribl User Group Meeting Oct. 2023 where I explain this (and some other) use case.

https://www.youtube.com/watch?v=IopYZ2JbUeE&t=1548s - Cribl Docs: Webhook Destination

Comments

Post a Comment